What is an LLMs.txt file?

In the rapidly evolving world of AI-driven search, the way websites are discovered, understood, and cited by large language models (LLMs) is changing. Traditional SEO techniques—sitemaps, metadata, robots.txt—are no longer enough on their own. A new standard, LLMs.txt, is emerging to help bridge the gap between human-readable web content and AI-friendly structure.

In short, LLMs.txt is a lightweight, Markdown-based text file placed at the root of your domain (e.g. https://yourdomain.com/llms.txt). It acts as a curated guide or “roadmap” telling AI systems: “These are the pages on my site that matter most; here’s how I’d like you to treat them.”

Unlike robots.txt, which is about permissioning and crawler control, LLMs.txt is about suggestion, guidance, and prioritization. It helps LLM-powered systems (e.g. ChatGPT, Claude, Perplexity, AI search engines) find and digest your key content more reliably.

Because AI systems often use real-time web queries (rather than full indexing), they may skip over deep content buried in complex UI or behind heavy JavaScript logic. LLMs.txt gives them a clean, direct path to your best content.

Origins and current status

LLMs.txt was proposed in September 2024 by Jeremy Howard of Answer.AI as a way for content owners to guide AI models at inference time. It complements, but does not replace, existing web standards.

As of 2025, adoption is still in early stages. Major LLM providers are cautious, and Google currently does not rely on LLMs.txt for its systems. Some sources even compare LLMs.txt to the (now largely ignored) “keywords” meta tag in terms of speculative hype. That said, some platforms have begun offering built-in LLMs.txt support (e.g. Yoast SEO) to help site owners prepare.

In practice, Anthropic is the major AI provider most often associated with LLMs.txt: it publishes llms.txt files for its own documentation. No major provider has publicly confirmed that its crawling or ingestion pipeline relies on the file. Even so, many tech and marketing companies believe it is wise to prepare early, anticipating wider adoption.

How does LLMs.txt work (Technically)?

LLMs.txt works by offering a curated, structured index of your site’s most relevant pages, along with optional descriptions and guidance. The file is written in Markdown so it remains both human- and machine-readable.

Here’s a simplified example:

# example.com

> Official site of Example Co.

## Products

- [Product A](https://example.com/product-a): Our flagship widget.

- [Product B](https://example.com/product-b): Advanced widget for power users.

## Docs & Tutorials

- [Getting Started](https://example.com/docs/getting-started): Setup guide.

- [FAQ](https://example.com/docs/faq): Common questions answered.

When an AI system (or agent) sees this file, it may use it to:

- Select what content to fetch — avoid irrelevant pages, skip unimportant sections.

- Assign priority or weight to pages you care about.

- Reference how to cite or summarize content (if you include notes or guidance).

- Exclude content you don’t want to appear or be misused.

- Optionally, use a fuller version called llms-full.txt (which can embed full content) if your documentation is manageable in size.

Because LLMs have context window limits, giving them jumping-off points helps. Rather than crawling your entire site’s HTML, they can start with the “best bits” you selected.

That said, it’s not a guarantee: different AI systems may interpret the file differently, or ignore it entirely (especially for non-web-connected models).
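To make the mechanics concrete, here is a minimal sketch of how a consumer might read such a file. It assumes the layout used in the example above (a single `#` title, `##` section headings, and `- [title](url): note` entries). The function name `parse_llms_txt` and the regular expression are illustrative only; real AI systems may parse the file differently or not at all.

```python
import re

# Matches "- [Title](https://url): optional note" (assumed entry format).
LINK_RE = re.compile(
    r"^-\s*\[(?P<title>[^\]]+)\]\((?P<url>[^)\s]+)\)(?::\s*(?P<note>.*))?$"
)


def parse_llms_txt(text):
    """Return (site_title, sections); sections maps heading -> list of entries."""
    site_title = None
    sections = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("# ") and site_title is None:
            site_title = line[2:].strip()      # first H1 is the site name
        elif line.startswith("## "):
            current = line[3:].strip()         # each H2 opens a new section
            sections[current] = []
        else:
            match = LINK_RE.match(line)
            if match and current is not None:
                sections[current].append({
                    "title": match.group("title"),
                    "url": match.group("url"),
                    "note": match.group("note") or "",
                })
    return site_title, sections


sample = """# example.com

> Official site of Example Co.

## Products

- [Product A](https://example.com/product-a): Our flagship widget.
- [Product B](https://example.com/product-b): Advanced widget for power users.

## Docs & Tutorials

- [Getting Started](https://example.com/docs/getting-started): Setup guide.
"""

title, sections = parse_llms_txt(sample)
for heading, entries in sections.items():
    print(heading, [entry["url"] for entry in entries])
```

An agent with a limited context window could then fetch only the URLs in the sections relevant to the user's question instead of crawling the whole site.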

Benefits (and Risks) for Website Owners

✅ Key Benefits

- Better AI Visibility & Attribution: You increase the chance your brand, products, or content are surfaced in AI-powered results, and properly cited.

- Control Over Messaging and Content Use: You can nudge AI away from pages that are outdated, promotional, or off-brand, while highlighting your strongest content.

- Cleaner AI Parsing: Reduces the risk of AI misinterpreting navigation menus, scripts, or UI elements by directing models to meaningful content.

- Future-Proofing: As AI search and conversational assistants become more central, early adoption of LLMs.txt can give you a modest but meaningful lead over competitors.

⚠️ Risks & Limitations

- No guaranteed adoption or effect: AI providers might ignore or only partially support LLMs.txt, or treat it as a weak signal.

- Maintenance burden: As your site evolves, you must update the file to keep it accurate.

- Overconfidence hazard: Overly rigid instructions might lead models to misprioritize pages or omit context that is not listed.

- Size and complexity constraints: On very large sites, the file may exceed context windows or contain too many entries to be useful.

- Misuse or omission: Leaving out important content by mistake could hurt AI-based discovery.
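Some of these risks can be caught with a simple automated check. The sketch below is one hypothetical way to do that: it flags duplicate URLs, non-HTTPS links, entries without descriptions, and files that grow past a size budget. The function name `lint_llms_txt` and the `max_chars` default are assumptions chosen for illustration, not values taken from any specification.

```python
import re

# Assumed entry format: "- [Title](https://url): optional description"
LINK_RE = re.compile(r"^-\s*\[([^\]]+)\]\(([^)\s]+)\)(?::\s*(.*))?$")


def lint_llms_txt(text, max_chars=50_000):
    """Return a list of human-readable warnings for an llms.txt body."""
    warnings = []
    seen_urls = set()
    lines = text.splitlines()

    if not any(line.startswith("# ") for line in lines):
        warnings.append("missing top-level '# ' title")

    for number, raw in enumerate(lines, start=1):
        match = LINK_RE.match(raw.strip())
        if not match:
            continue  # headings, blank lines, and prose are not checked
        title, url, note = match.groups()
        if url in seen_urls:
            warnings.append(f"line {number}: duplicate URL {url}")
        seen_urls.add(url)
        if not url.startswith("https://"):
            warnings.append(f"line {number}: non-HTTPS URL {url}")
        if not note:
            warnings.append(f"line {number}: no description for '{title}'")

    # max_chars is an arbitrary budget; tune it to the models you care about.
    if len(text) > max_chars:
        warnings.append(f"file is {len(text)} characters (budget {max_chars})")
    return warnings


draft = "\n".join([
    "# example.com",
    "",
    "## Products",
    "",
    "- [Product A](https://example.com/product-a): Our flagship widget.",
    "- [Product A again](https://example.com/product-a)",
    "- [Old page](http://example.com/old): Legacy page.",
])

for warning in lint_llms_txt(draft):
    print(warning)
```

Running a check like this in a build or deploy step is one way to keep the maintenance burden manageable as the site changes.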

Some critics suggest that LLMs.txt is more hype than substance at the moment, pointing out that we don’t yet have robust metrics or large-scale field experiments proving concrete ROI.

How to build an LLMs.txt file (Best Practices)?

If you decide to adopt LLMs.txt for your site, here’s a recommended approach:

- Select your priority pages: Start with your hero content (landing pages, key products, documentation, FAQs, cornerstone blog posts).

- Write helpful descriptions: Add a concise summary to each link so AI systems have context for the page.

- Use sections and organization: Group content by theme (Products, Docs, Blog) so AI models can navigate the hierarchy.

- Exclude or de-prioritize weaker pages: Optionally note “disallow,” “ignore,” or “deprecated” content you don’t want AI to use.

- Consider an llms-full.txt: For documentation sites, a fuller file embedding all content may reduce back-and-forth fetching.

- Upload to site root and test: Place the file at /llms.txt so that https://yourdomain.com/llms.txt is accessible. Then validate formatting, verify link correctness, and optionally use testing tools.

- Monitor & iterate: Over time, adjust the file as content shifts. Track AI-derived traffic or mentions to see if your positioning improves.
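The first few steps above can be automated. As a rough sketch, the following generates the file body from a hand-picked list of pages, grouped by section. The `Page` class and `build_llms_txt` function are hypothetical names introduced for this example; in a real setup the page list might come from your CMS or static site generator.

```python
from dataclasses import dataclass


@dataclass
class Page:
    section: str           # heading the page is grouped under, e.g. "Products"
    title: str
    url: str
    description: str = ""  # optional one-line summary


def build_llms_txt(site_name, summary, pages):
    """Render an llms.txt body: H1 title, summary, then one H2 per section."""
    lines = [f"# {site_name}", "", f"> {summary}", ""]

    # Group pages by section, keeping first-seen section order.
    sections = {}
    for page in pages:
        sections.setdefault(page.section, []).append(page)

    for heading, entries in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for page in entries:
            entry = f"- [{page.title}]({page.url})"
            if page.description:
                entry += f": {page.description}"
            lines.append(entry)
        lines.append("")

    return "\n".join(lines).rstrip() + "\n"


pages = [
    Page("Products", "Product A", "https://example.com/product-a",
         "Our flagship widget."),
    Page("Docs & Tutorials", "Getting Started",
         "https://example.com/docs/getting-started", "Setup guide."),
    Page("Docs & Tutorials", "FAQ", "https://example.com/docs/faq"),
]

print(build_llms_txt("example.com", "Official site of Example Co.", pages))
```

The returned string can be written to a file named llms.txt in your site's web root as part of the deploy process, so the file is regenerated whenever the page list changes.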

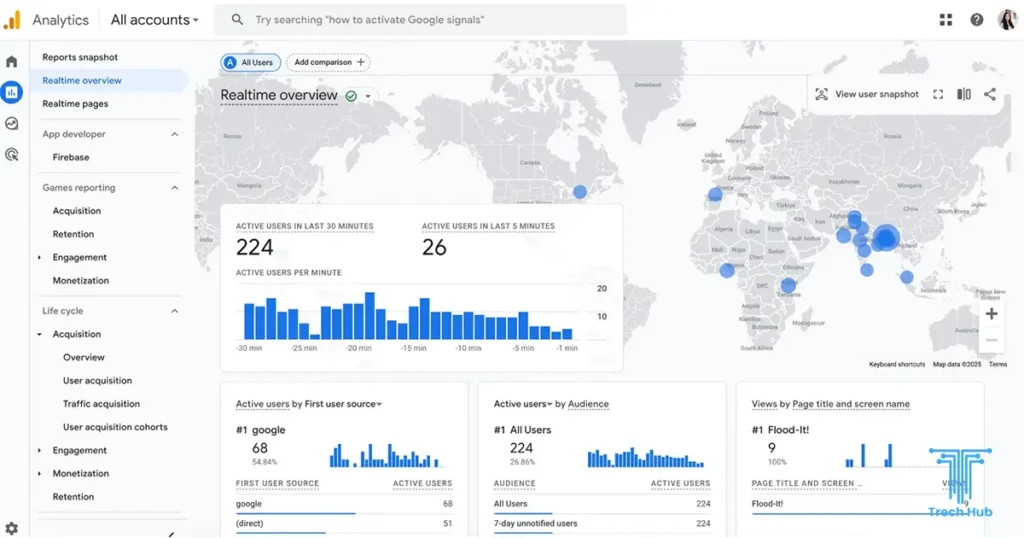

How Trech Hub helps you leverage LLMs.txt & AI Search

At Trech Hub, we recognize that the AI-powered era of search is no longer on the horizon—it’s here. As generative engines (ChatGPT, Claude, Gemini, Perplexity) become how many users get answers, brands must adapt. That’s why we incorporate GEO (Generative Engine Optimization) and LLMs.txt best practices into our offerings. Here’s how we help:

- Strategy & consultancy: We audit your content architecture and help you select which pages should go into your LLMs.txt, balancing marketing objectives and AI visibility.

- Technical implementation: Our team can generate and deploy your LLMs.txt (and optionally llms-full.txt), integrate it into your CMS or static site generator, and maintain it over time.

- Testing & validation: We validate file syntax, test AI ingestion, and benchmark before/after visibility in AI-powered search platforms.

- Holistic AI SEO integration: We don’t stop at LLMs.txt. We align your existing SEO, schema markup, content structure, internal linking, and AI-aware content strategy so your site speaks to both humans and AI systems.

- Ongoing monitoring: Over time, we measure whether your most vital pages are being surfaced in AI results, track snippets and attributions, and iterate.

By making LLMs.txt part of your AI search toolkit, you gain a competitive edge in an era when many sites still rely only on classic SEO. With Trech Hub’s support, you’re not just future-proofing—you’re positioning for growth now.

Conclusion

LLMs.txt is not a silver bullet—but it is a powerful, low-overhead tool to help guide AI systems toward your most important content. While adoption is still early and uncertain, smart organizations are already experimenting. As AI-powered search and content consumption proliferate, having a thoughtful LLMs.txt gives you influence over how your brand is represented, cited, and understood in generated answers.

If you’re interested in integrating LLMs.txt for your website, or want a full AI search optimization strategy, Trech Hub is ready to help. Drop us a line—we’d love to audit your site, get your files live, and ensure your content doesn’t get lost in the AI shuffle.